AI Down to Earth #3: Artificial brain, GPT-5 is a disappointment, 10x more power-efficient LLMs, Hardware is hot

Each week I bring you the latest major advancements and news in AI, along with other intriguing insights, all tailored specifically for business leaders.

Intro

First of all, it’s great to have you here! I created this newsletter to translate the latest AI advancements, technicalities, and news into a digestible format for business leaders. My goal is to help you understand how AI impacts the modern workforce and how emerging trends are reshaping our world.

You’ll learn how to make sense of AI and navigate a world that is inevitably becoming more complex.


In this week’s edition:

Artificial brain for a rat

Will GPT-5 be a disappointment?

10x more power-efficient LLMs

Hardware eats software?

Virtual rat with an artificial brain

DeepMind, Google's AI division, has introduced a groundbreaking virtual rat whose artificial brain controls a digital body that mimics the movements of a real rodent.

According to the researchers, this virtual rat replicates the "evolutionary marvel" of animal movement.

The project is the result of a collaboration between neuroscientists at Harvard University and researchers at DeepMind in the UK. Together, they trained the digital creature to imitate movement data recorded from real rats. As the researchers write in their paper:

To facilitate this, we built a ‘virtual rodent’, in which an artificial neural network actuates a biomechanically realistic model of the rat in a physics simulator. We used deep reinforcement learning to train the virtual agent to imitate the behavior of freely-moving rats, thus allowing us to compare neural activity recorded in real rats to the network activity of a virtual rodent mimicking their behavior.

[…]

These results demonstrate how physical simulation of biomechanically realistic virtual animals can help interpret the structure of neural activity across behavior and relate it to theoretical principles of motor control.

This virtual rat can serve as a foundation for other artificial intelligence simulations, potentially revolutionizing neuroscience research. It could help researchers explore how neural circuits are compromised by disease and how real brains generate complex behaviors.

Will GPT-5 be a disappointment?

OpenAI CTO Mira Murati recently stated that the models in the company's labs are "not that far ahead" of what the public can already use for free. This suggests that OpenAI does not have a GPT-5-level model ready for release any time soon. Or could this be an attempt to downplay expectations before a major announcement?

Will we need less power for LLMs?

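A very interesting paper, "Scalable MatMul-free Language Modeling," describes how researchers built a large language model (LLM) without matrix multiplication (MatMul), the operation that dominates the computational cost of LLMs and the main reason they run on GPUs. The key trick is to restrict the weights of the model's dense layers to just three values (-1, 0, and +1), so that multiplications turn into simple additions and subtractions.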
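To make the idea concrete, here is a minimal Python sketch of that core trick, assuming the weights have already been quantized to the ternary values -1, 0 and +1. It is an illustration of the arithmetic only, not the paper's implementation: how the weights are quantized and how attention is replaced are not shown, and the function names are hypothetical. With ternary weights, each output is just a running sum and difference of inputs, so the layer needs no multiplications at all.

```python
from typing import List


def ternary_matvec(weights: List[List[int]], x: List[float]) -> List[float]:
    """Multiply a ternary weight matrix by a vector using only additions and subtractions."""
    outputs = []
    for row in weights:
        acc = 0.0
        for w, xi in zip(row, x):
            if w == 1:
                acc += xi  # +1 weight: add the input
            elif w == -1:
                acc -= xi  # -1 weight: subtract the input
            elif w != 0:
                raise ValueError("weights must be -1, 0, or +1")
            # 0 weight: the input is skipped entirely
        outputs.append(acc)
    return outputs


def dense_matvec(weights: List[List[float]], x: List[float]) -> List[float]:
    """Conventional matrix-vector product that uses multiplications, for comparison."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in weights]


# Hypothetical toy layer: 2 outputs, 3 inputs
W = [[1, 0, -1],
     [-1, 1, 1]]
x = [0.5, 2.0, -1.5]

print(ternary_matvec(W, x))  # [2.0, 0.0]
print(dense_matvec(W, x))    # [2.0, 0.0]
```

Both functions give the same result, but the first never multiplies. On real hardware the savings also come from memory: a ternary weight needs under two bits of storage instead of the 16 bits typical for today's models.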

Compared with unoptimized baselines, they reduced memory use by about 60% during training and by about 90% during inference. Most importantly, they ran the LLM on custom FPGA hardware that draws only 13W of power (GPUs running Transformer models typically draw hundreds of watts).

This breakthrough could mean not only that larger models will run on mobile devices, but also that future data centers might not each need their own nuclear power plant. For businesses, that could translate into lower costs of running LLMs.

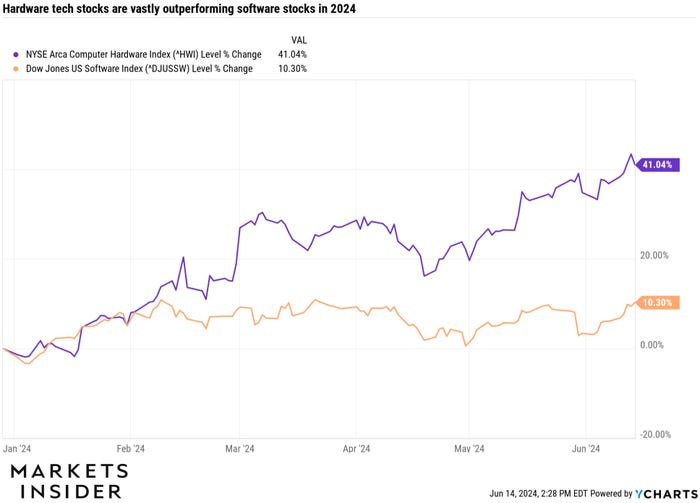

Hardware is on fire

Marc Andreessen's famous 2011 proclamation that "software is eating the world" has held true for the past decade. However, generative AI is now shining a spotlight on a less glamorous part of tech: hardware.

A prime example of this shift is last week's unveiling of Apple Intelligence. While much of the buzz centered on Apple's partnership with OpenAI, its behind-the-scenes reliance on Google's hardware to train its AI models was just as significant, according to Business Insider’s Hugh Langley.

The vast data-center footprints of companies like Google, Microsoft, and Amazon are proving invaluable during the generative AI boom, because large language models require specialized hardware for training, much like bodybuilders need a gym. However, there are only so many "gyms" available, prompting unusual partnerships between tech giants, such as Apple and Google or Oracle and Microsoft.

"This GenAI cycle is infrastructure, all infrastructure," Mortonson told Business Insider this week. "The cloud titans are now spending $200 billion this year, which is up 50% on data centers. That's the horsepower, or the engine of Gen AI."

"30% of the Fortune 500 have moved to the cloud. 10% of that are Gen AI capable. So, we have a long way to go for software and an acceptable return on invested capital to materialize. That's why you're not seeing it in software. There is no return on invested capital because there's no applications. So, you're building the car and the engine, but you have no passengers in software," Mortonson said.

Mortonson explained that one of the key challenges companies face in making good use of generative AI is organizing and structuring their data in a format that Gen AI can understand.

That process takes at least 15 months, and based on Mortonson's recent conversations with software-focused tech executives, very few of them have even started it.

Thank you!

I appreciate any feedback you may have! Please feel free to reply directly to this email with your thoughts or leave a comment👇

I am the Founder and CEO of Softwise.AI, where we specialize in helping companies implement AI-powered solutions.